PageRank - What it is & How it works

What is PageRank

PageRank is the best-known algorithm used by Google. It was originally developed by Larry Page and is named after him. It paved the way for Google’s success as a commercial search engine, and was widely adopted across the search industry. In this article we explore the history of PageRank, its interpretations and its limitations, and try to answer the question of why it is still relevant 26 years after its creation.

History of PageRank

The idea behind PageRank wasn’t completely new: it stemmed from attempts to evaluate the influence of scientific publications by counting how often they were cited in other publications. Larry Page famously applied this idea to the World Wide Web to tackle keyword spam, which had rendered many search results essentially useless at the time.

“a document should be important (regardless of its content) if it is highly cited by other documents.”

from the original PageRank patent

PageRank Definition and Interpretation

Most people try to define PageRank as some score or juice flowing from one page to another, or from a higher-authority website to a lower-authority website. The problem with this definition is that it immediately raises the question: “Where does the initial PageRank come from?”. Imagine a situation where page A links to page B, and page B links back to page A. What would happen in this case? Would PageRank flow in circles?

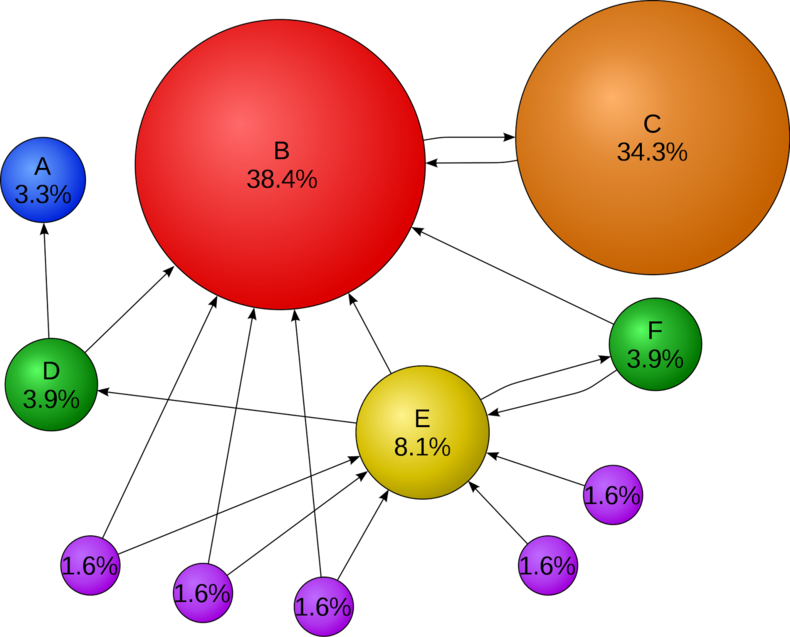

Image source: Wikipedia

To answer this question we need to resort to another interpretation of PageRank. In order for it to work mathematically, we need to think of PageRank in terms of a random web surfer, but what does this mean?

Let’s imagine a user visiting page A, which has three links, to pages B, C and D. The user randomly clicks on one of these links and ends up on, let’s say, page C. Page C, in turn, can also have multiple links, and our user again clicks on one of them at random. This random clicking behavior is called a random walk.

If we represent the Web as a graph and let this web surfer move through it at random, we get a mathematical abstraction called a Markov chain. A useful property of such a chain is that, if the random surfer keeps going from page to page for long enough, the probability of finding them on any given page settles to a fixed value.

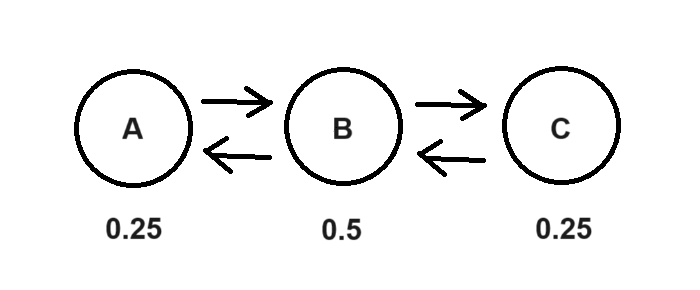

Let’s illustrate this with a simple example.

If we start on page B and keep randomly clicking on links for a long time, then 25% of the time we will be on page A, 50% of the time on page B, and the remaining 25% of the time on page C.

Therefore, the PageRank of page B is 0.5, and the PageRank of pages A and C is 0.25 each. Because users see page B twice as often, it is deemed to have more importance, or more weight, or more PageRank. Another way to put it is that page B has higher authority. However, this notion of authority is a consequence of the probability model rather than an underlying principle.

“Looked at another way, the importance of a page is directly related to the steady-state probability that a random Web Surfer ends up at the page after following a large number of links.”

from the original PageRank patent
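The steady-state idea can be checked with a small simulation. The sketch below assumes a hypothetical three-page graph in which A and C each link only to B, and B links to both A and C; the graph in the figure may differ, and the function name and seed are illustrative only. It follows random links for many clicks and reports the share of visits each page receives.

```python
import random

# Hypothetical three-page graph (an assumption; the figure's graph may differ):
# A and C each link only to B, while B links to both A and C.
LINKS = {
    "A": ["B"],
    "B": ["A", "C"],
    "C": ["B"],
}


def simulate_random_surfer(links, start, steps, seed=0):
    """Follow random links for `steps` clicks; return each page's share of visits."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    visits = {page: 0 for page in links}
    page = start
    for _ in range(steps):
        page = rng.choice(links[page])  # click one outgoing link at random
        visits[page] += 1
    return {page: count / steps for page, count in visits.items()}


shares = simulate_random_surfer(LINKS, start="B", steps=100_000)
print(shares)
```

In this particular graph every second click lands on B, so B receives exactly half of the visits, while A and C split the other half roughly evenly. That matches the 25% / 50% / 25% split described above.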

Why are backlinks important and will they become obsolete?

There is almost constant speculation amongst the SEO community as to whether backlinks have become less important or not. Some people claim that in the near future Google will become smart enough to be able to understand the content so well, that backlinks will become obsolete. Rather than blindly taking either side of the argument, let’s try to reason about it from a technological standpoint.

First of all, calculating PageRank takes around 50 matrix multiplications. Think of matrix multiplication as multiplying the numbers in rows and columns of an Excel spreadsheet and then summing them together. It’s a very fast and cheap operation that, even with trillions of pages, can be split across multiple computers working in parallel and completed in a matter of hours.

Image source: The PageRank Citation Ranking: Bringing Order to the Web
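To make the “multiply and sum” step concrete, here is a minimal sketch of the repeated calculation, usually called power iteration. It is an illustration under stated assumptions, not Google’s implementation: the four-page graph is hypothetical, the damping factor of 0.85 follows the value commonly quoted from the original PageRank paper, and the way pages without outgoing links are handled is one common convention among several. The default of 50 iterations mirrors the “around 50” multiplications mentioned above.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Power iteration: repeatedly pass each page's score along its outgoing links."""
    pages = list(links)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}  # start from a uniform distribution
    for _ in range(iterations):
        # Every page gets a small baseline share from random jumps.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page, outlinks in links.items():
            if outlinks:
                share = damping * rank[page] / len(outlinks)
                for target in outlinks:
                    new_rank[target] += share
            else:
                # Assumed convention: a page with no outgoing links
                # spreads its score evenly over all pages.
                for target in pages:
                    new_rank[target] += damping * rank[page] / n
        rank = new_rank
    return rank


# Hypothetical four-page graph used only for illustration.
LINKS = {
    "A": ["B", "C"],
    "B": ["C"],
    "C": ["A"],
    "D": ["C"],
}

ranks = pagerank(LINKS)
for page, score in sorted(ranks.items(), key=lambda item: -item[1]):
    print(page, round(score, 3))
```

Each pass only multiplies and adds numbers along existing links, which is why the work is cheap and easy to split across machines. In this toy graph page C, which collects links from three other pages, ends up with the highest score, and page D, which nobody links to, keeps only the baseline share.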

On the other hand, training a Large Language Model (LLM) such as the ones behind ChatGPT requires hundreds of millions of dollars, whole datacenters of computers and months of training. What you get as a result is a compressed representation of the language and information on the Internet, without any notion of authority. This “static model” only reflects what was said on average on the Internet about something.

The goal of Google is to deliver the best possible results to its users. In this regard, backlinks serve as a vote, something similar to likes on social media. The more likes a post receives, the better quality the post is deemed to have. Because PageRank is extremely cheap and fast to calculate, Google can recalculate it on a daily basis, and have a dynamic picture of the preferences, or votes, of its users.

LLMs/AI, on the other hand, are millions of times more expensive and slower to train, and only provide you with a static picture of lexical/concept relationships. Using the social media analogy, think of backlinks as real-time likes under a Twitter post, and LLMs/AI as statistics that tell us that, for example, posts mentioning Elon Musk are usually more popular. It is clear that we would prefer to use dynamic data like Twitter likes in order to show the most relevant posts to users.

Looking at it from another angle, Google’s quality raters assess search results on two scales: needs met (relevance) and page quality. Classic Information Retrieval is only concerned with relevance, that is, how well a webpage matches the search query. However, in the real world, people always try to manipulate search results for commercial purposes. That’s why Google always has to fight all sorts of spam; it’s a never-ending cat-and-mouse game. The motivation for the creation of PageRank was to remove low-quality results from the results page. PageRank goes into the Page Quality part of the equation, whereas better understanding content and matching it with the search query using LLMs/AI goes into the Relevance part. They serve different purposes.

Given all the factors mentioned above, we don’t expect backlinks and PageRank to become less important in the near future.

Is PageRank Still Used Today?

Yes, PageRank is still used in Google’s ranking algorithms today, but it has changed from the original PageRank algorithm of 1997 that we’ve talked about so far.

The core idea remains almost the same, but there have been some changes made to reduce the computational overhead of calculating PageRank, to better model how people really use the web and to combat those who try to manipulate search rankings.

Reducing the Computational Overhead

In 2006 Google filed a patent, “Producing a ranking for pages using distances in a web-link graph”, which adds the concept of Seed Pages to the idea of PageRank. Seed Pages are highly authoritative, trusted sites, and other pages online can be ranked according to how far away they are from these Seed Pages in the link graph. The closer a page is to a Seed Page, the better it can be considered to be.

Adding these Seed Pages allows the algorithms to be more efficient when running, an important factor as the number of pages online was (and is) growing. It also had the added bonus of helping reduce the impact of spam links on the link graph as they are unlikely to be close to the Seed Pages.
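As a rough illustration of the distance idea, the sketch below counts the fewest link hops from a set of seed pages to every reachable page using a breadth-first search. This is a simplification under stated assumptions: the graph and page names are hypothetical, and plain hop counts stand in for whatever distance measure the patent actually defines.

```python
from collections import deque


def distances_from_seeds(links, seeds):
    """Multi-source breadth-first search: fewest link hops from any seed to each page."""
    distance = {seed: 0 for seed in seeds}
    queue = deque(seeds)
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in distance:  # first visit is the shortest hop count
                distance[target] = distance[page] + 1
                queue.append(target)
    return distance


# Hypothetical link graph; "seed" stands in for a trusted Seed Page.
LINKS = {
    "seed": ["news", "blog"],
    "news": ["shop"],
    "blog": ["shop"],
    "shop": [],
    "spam1": ["spam2", "shop"],
    "spam2": ["spam1"],
}

dist = distances_from_seeds(LINKS, seeds=["seed"])
print(dist)
```

The two spam pages link to each other and to the shop, but nothing reachable from the seed links to them, so they never receive a distance at all. This is the intuition behind spam links being unlikely to sit close to Seed Pages.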

Better Modelling How People Use The Web - The Reasonable Surfer

In 2004 Google filed a patent, “Ranking documents based on user behaviour and/or feature data”, which introduced the idea of the Reasonable Surfer. The original PageRank model used the Random Surfer model, where any link on a webpage was as likely to be clicked as any other link. In practice, however, people are far more likely to click on some links than others: links in the main navigation, for example, are much more likely to be clicked than links in the footer. In the Reasonable Surfer model, links are weighted by how likely they are to be clicked, so a link that is highly likely to be clicked passes more PageRank than a link that is less likely to be clicked.
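The difference between the two surfer models can be sketched in a few lines. The link names and weights below are hypothetical and chosen only for illustration; the patent does not give these numbers, and how such weights would be derived in practice is not covered here.

```python
def click_probabilities(weighted_links):
    """Turn per-link click weights into probabilities that sum to 1."""
    total = sum(weighted_links.values())
    return {target: weight / total for target, weight in weighted_links.items()}


# Hypothetical weights: a higher value means the link is more likely to be clicked.
page_links = {
    "main-nav-link": 6.0,
    "in-content-link": 3.0,
    "footer-link": 1.0,
}

# Random Surfer: every link on the page is equally likely to be followed.
random_surfer = {target: 1 / len(page_links) for target in page_links}

# Reasonable Surfer: the chance of following a link is proportional to its weight.
reasonable_surfer = click_probabilities(page_links)

print(random_surfer)
print(reasonable_surfer)
```

Under the random model each of the three links would pass on a third of the page’s PageRank. With these assumed weights the main navigation link passes on 60%, the in-content link 30% and the footer link 10%.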

Combating Search Ranking Manipulation

Ever since it became widely known that links from other pages are an important ranking factor, some site owners have tried to manipulate search rankings by building links they wouldn’t have gained naturally.

To combat this manipulation, Google has introduced several measures. Site owners can flag links that should be ignored for PageRank purposes using the nofollow, sponsored and ugc values of a link’s rel attribute, and Google has also filed a number of patents in this area.
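On the markup side, these flags are values of the rel attribute on a link. The sketch below uses Python’s standard html.parser module to separate flagged links from unflagged ones in a hypothetical HTML snippet. It only shows how the flags can be read from a page; how a search engine actually treats them is up to the search engine.

```python
from html.parser import HTMLParser

# rel values that ask search engines not to count a link as an endorsement.
IGNORED_REL_VALUES = {"nofollow", "sponsored", "ugc"}


class LinkCollector(HTMLParser):
    """Collect href values, split by whether the link carries an ignored rel value."""

    def __init__(self):
        super().__init__()
        self.counted = []
        self.ignored = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if href is None:
            return
        # rel may hold several space-separated values, e.g. "ugc nofollow".
        rel_values = set((attributes.get("rel") or "").lower().split())
        if rel_values & IGNORED_REL_VALUES:
            self.ignored.append(href)
        else:
            self.counted.append(href)


# Hypothetical page fragment used only for illustration.
HTML = """
<a href="https://example.com/editorial">Editorial link</a>
<a href="https://example.com/ad" rel="sponsored">Paid placement</a>
<a href="https://example.com/comment" rel="ugc nofollow">Forum comment</a>
"""

collector = LinkCollector()
collector.feed(HTML)
print("counted:", collector.counted)
print("ignored:", collector.ignored)
```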

In 2004 Google filed the “Ranking based on reference contexts” patent, which details methods for identifying whether a link on a page is likely to be link spam by looking at the anchor text in the context of the text surrounding it.

Final Thoughts

While much has changed since Larry Page’s original 1997 algorithm, PageRank is still an important part of how Google ranks webpages. Many improvements have been made over time to reduce costs, improve results and combat web spam, but the core idea of the original patent, that links between pages can be used to determine authority and relevance, remains firm.

References

- Method for node ranking in a linked database (patent)

- PageRank Overview and Markov Chains (video)

- PageRank: A Trillion Dollar Algorithm (video)

- Introduction to Information Retrieval (book)

- Mining of Massive Datasets (book)

- Producing a ranking for pages using distances in a web-link graph (patent)

- Ranking documents based on user behaviour and/or feature data (patent)

- Ranking based on reference contexts (patent)